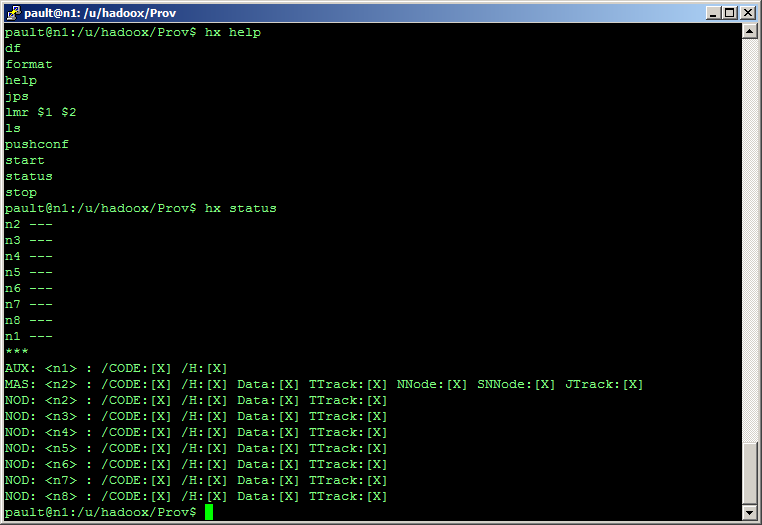

Hadoop Etudes : #1 : TableT

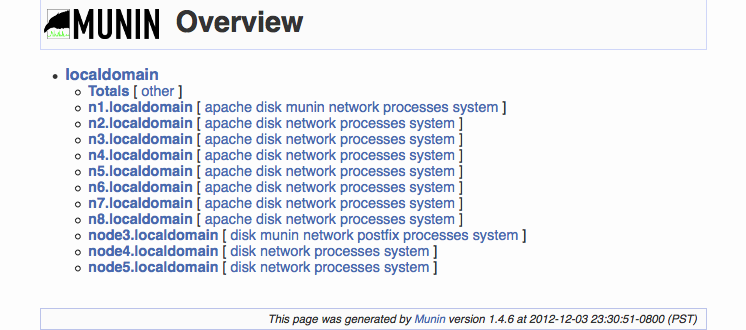

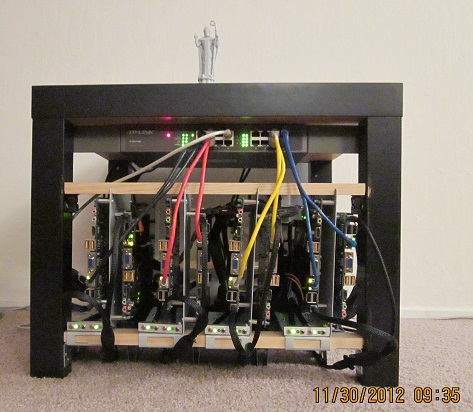

DataCenter for $2K

To run Hadoop we need a DataCenter, so let's build it

- A table from IKEA for $9, bunch of laptop hard drives, bunch of MiniITX mother boards (fanless), 2 UPS-es, some cables, some drilling

- 8 units

- No noise. No heat

- OS is Ubuntu

- It's not a toy

November 03. 2012 - December 03. 2012

Background

-

The Mini-Cluster

Early supercomputers used parallel processing and distributed computing and to link processors together in a single machine. Using freely available tools, it is possible to do the same today using inexpensive PCs - a cluster. Glen Gardner liked the idea, so he built himself a massively parallel Mini-ITX cluster using 12 x 800Mhz nodes.

-

Homemade "Helmer" Linux Cluster

I then found a number of people on the Web (in particular, Janne and Tim Molter) who have each built a Linux cluster into an Ikea "Helmer" file cabinet at a fairly low cost (around 2,500 USD).

-

Data Center In A Box

Few weeks ago, I was thinking to make an HPCC in a box for myself, based on the idea given by Douglas Eadline, on his website [[1]]. Later, while dealing with some problems of one of my clients, I decided to design an inexpensive chassis, which could hold in-expensive "servers" . My client's setup required multiple Xen/KVM hosts running x number of virtual machines. The chassis is to hold everything, from the cluster mother-boards, to their power supplies, network switches, power cables, KVM, etc.

-

Mini-ITX cluster

Here is a question: why? - Because we can!